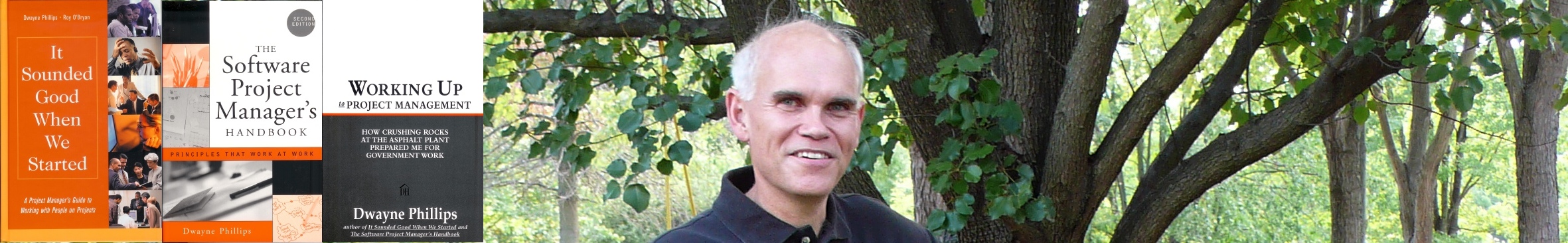

by Dwayne Phillips

These chattering bots can “generate” all sorts of information. Humans must do more and better to keep their paying jobs.

I recently sat in a lecture about some worthwhile topic. The lecturer started with, “There are eight aspects to this topic that we will cover in the next ten sessions.” The lecturer listed those eight aspects on the PowerPoint screen and described each in some detail.

One of my fellow attendees pulled me aside afterwards. While sitting bored in the lecture, he had asked ChatGPT, “What are the major aspects of this topic?” ChatGPT immediately spit out the same eight things our learned lecturer listed. Further prompts produced better summaries and details of the eight aspects than the lecturer produced. (Many other systems do the same, but I will use “ChatGPT” in this short essay.)

Our lecturer could not spell ChatGPT, let alone use it. Hence, his lecture was the result of long hours of hard work. In other words, someone paid the expert lecturer lots of money to do something that a novice could do in 15 minutes with ChatGPT.

And hence we arrive at higher expectations for human intelligence and human experts.

“ChatGPT could have pumped out that material. I am paying you money to do much better than that.”

I have yet to hear the above statement. That is an unfortunate indictment of the ignorance of those who hire human experts. It is also a call to action for human experts everywhere. Laymen can now produce accurate information on many topics. If we human experts want to continue to be paid for our expertise, it’s time to up our game.

Start lectures with, “You could pull much information on this topic from ChatGPT, including these eight main aspects. I refer you to the accompanying handout for those. Now we will delve into material that ChatGPT doesn’t ‘know’ yet.”

New tools are valuable. They are also forcing experts to do better. Let’s do better.
